Sending alerts to a channel such as Slack, email or PagerDuty is easy to configure in OpenShift.

Although you can configure a generic webhook as a receiver type, it cannot be used for a Discord webhook. Alertmanager's webhook receiver sends its own JSON payload, and the Discord webhook API does not accept that format.

Set up Discord

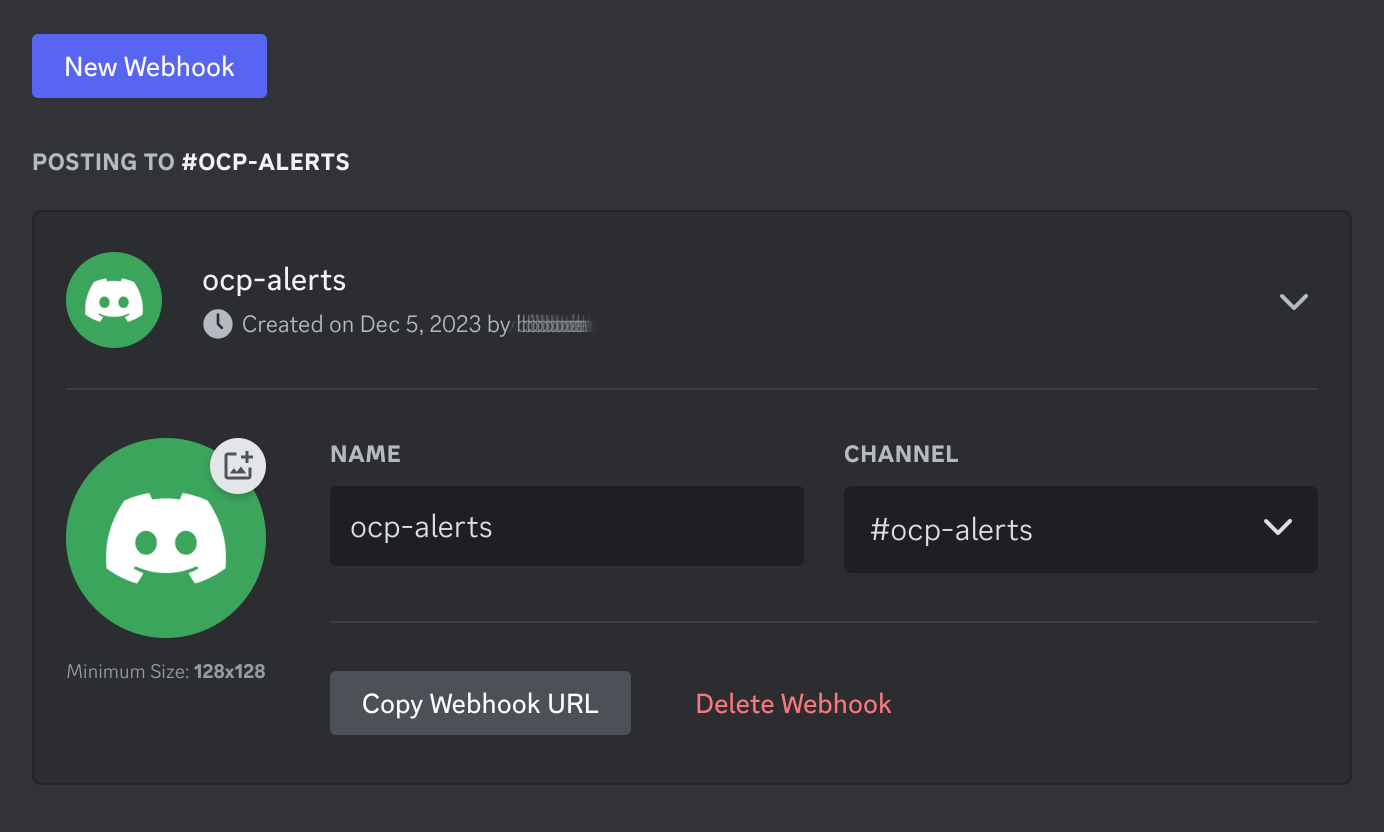

To use Discord as an alert target, you need your own Discord server. On that server, create a webhook as follows:

- Open Server Settings ⇾ Integrations ⇾ Webhooks.
- Click New Webhook.
- To configure the webhook, click the angle bracket. Here you can set the Name of the bot and the Channel the messages should be sent to.
- Copy the webhook URL by clicking the Copy Webhook URL button.

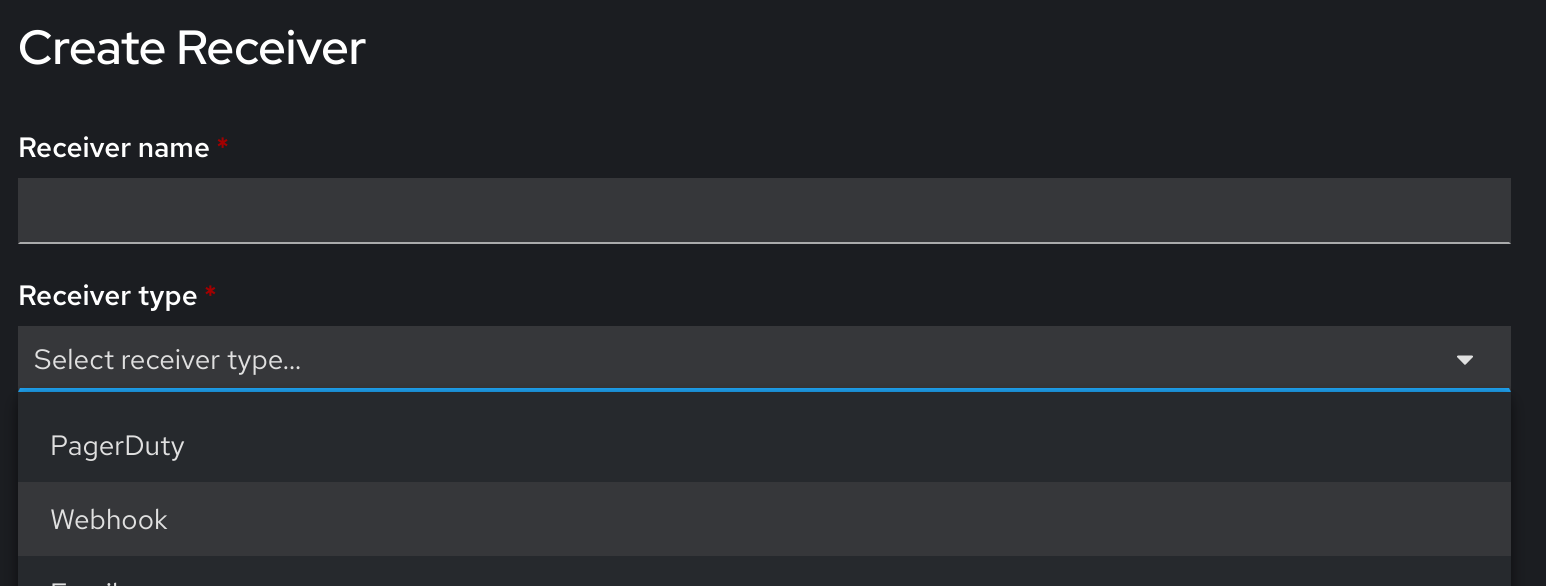
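Before wiring the webhook into Alertmanager, you may want to confirm that it accepts messages. The following is a minimal sketch that assumes curl is installed. The function names and the DISCORD_WEBHOOK_URL variable are hypothetical. It posts a plain-text message using the `content` field of Discord's Execute Webhook API.

```shell
#!/bin/sh
# Hypothetical helper: wraps a plain-text message in the minimal JSON body
# accepted by Discord's Execute Webhook endpoint ({"content": "..."}).
# It does NOT escape quotes, backslashes or newlines, so only use it for
# simple ASCII test messages.
discord_payload() {
  printf '{"content": "%s"}' "$1"
}

# Hypothetical helper: posts a test message to the webhook whose URL is
# stored in DISCORD_WEBHOOK_URL (the URL copied via "Copy Webhook URL").
send_test_message() {
  if [ -z "${DISCORD_WEBHOOK_URL:-}" ]; then
    echo "DISCORD_WEBHOOK_URL is not set" >&2
    return 1
  fi
  # --fail makes curl return a non-zero exit status on HTTP errors such as 400.
  curl -sS --fail \
    -H 'Content-Type: application/json' \
    -d "$(discord_payload "$1")" \
    "$DISCORD_WEBHOOK_URL"
}

# Example usage (replace the placeholder with your webhook URL):
#   export DISCORD_WEBHOOK_URL='<Webhook URL>'
#   send_test_message "Test message from OpenShift"
```

If the call succeeds, the test message should appear in the channel selected for the webhook.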

Set up Alertmanager Receiver

Discord cannot yet be selected as a receiver type. You therefore need to configure it in the YAML view of the web console or directly from the command line, as follows:

- Export the existing configuration:

  ```shell
  $ oc -n openshift-monitoring extract secret/alertmanager-main --keys=alertmanager.yaml
  alertmanager.yaml
  ```

  This creates the file alertmanager.yaml, which is used in the following configuration steps.

- Add a Discord receiver to your receivers:

  ```yaml
  receivers:
    - name: default
      discord_configs:
        - webhook_url: >-
            <Webhook URL>
    - name: Watchdog
  ```

  Replace `<Webhook URL>` with the URL you copied while creating the webhook in the previous section.

- Add a message template

  We define a message template for discord_configs:

  ```yaml
  receivers:
    - name: default
      discord_configs:
        - webhook_url: >-
            <Webhook URL>
          message: |-
            {{ range .Alerts.Firing }}
            Alert: **{{ printf "%.150s" .Annotations.summary }}** ({{ .Labels.severity }})
            Description: {{ printf "%.150s" .Annotations.description }}
            Alertname: {{ .Labels.alertname }}
            Namespace: {{ .Labels.namespace }}
            Service: {{ .Labels.service }}
            {{ end }}
            {{ range .Alerts.Resolved }}
            Alert: **{{ printf "%.150s" .Annotations.summary }}** ({{ .Labels.severity }})
            Description: {{ printf "%.150s" .Annotations.description }}
            Alertname: {{ .Labels.alertname }}
            Namespace: {{ .Labels.namespace }}
            Service: {{ .Labels.service }}
            {{ end }}
  ```

  This template is needed because the Discord API enforces a message size limit and rejects messages that are too long with an HTTP 400 error. Prometheus alerts reach this limit easily. We therefore reduced the message to the bare minimum: it includes only a few labels, and it truncates the summary and description to 150 characters each.

- Update your Alertmanager configuration:

  ```shell
  $ oc -n openshift-monitoring create secret generic alertmanager-main --from-file=alertmanager.yaml --dry-run=client -o=yaml |
      oc -n openshift-monitoring replace secret --filename=-
  ```

  The alertmanager-main-0 pod detects the change to alertmanager.yaml and reloads the configuration. You can check that the configuration was reloaded by following the pod's logs:

  ```shell
  $ oc -n openshift-monitoring logs -f alertmanager-main-0
  ts=2023-12-27T17:51:43.531Z caller=coordinator.go:113 level=info component=configuration msg="Loading configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml
  ts=2023-12-27T17:51:43.531Z caller=coordinator.go:126 level=info component=configuration msg="Completed loading of configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml
  ```
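The message template uses `printf "%.150s"` to keep the summary and description short. For a string, the precision `.150` caps the output at 150 characters and leaves shorter strings unchanged. POSIX printf uses the same notation, so you can try the effect in a shell. This is a minimal sketch, and the function name is hypothetical. The shell may count bytes, whereas Go's template printf counts characters (runes), so the two only agree for ASCII text.

```shell
#!/bin/sh
# Hypothetical helper mirroring {{ printf "%.150s" ... }} from the template:
# prints at most the first 150 characters of its argument (ASCII assumed).
truncate_150() {
  printf '%.150s' "$1"
}

# Build a string of 200 "x" characters to stand in for a long alert description.
long_text=$(printf '%200s' '' | tr ' ' 'x')

truncated=$(truncate_150 "$long_text")
echo "original: ${#long_text} characters"   # prints "original: 200 characters"
echo "truncated: ${#truncated} characters"  # prints "truncated: 150 characters"
```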
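Instead of watching the log stream by eye, you can check for the "Completed loading of configuration file" message in a script. The following is a minimal sketch. The helper name is hypothetical, and the live-cluster call in the comment assumes you are logged in with oc.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit status 0) if the given Alertmanager log
# text contains the message logged after a successful configuration reload.
config_reloaded() {
  printf '%s\n' "$1" | grep -q 'msg="Completed loading of configuration file"'
}

# Sample log line taken from the pod log output shown above.
sample='ts=2023-12-27T17:51:43.531Z caller=coordinator.go:126 level=info component=configuration msg="Completed loading of configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml'

if config_reloaded "$sample"; then
  echo "configuration reloaded"
fi

# Against a live cluster, check the last few minutes of logs instead of following them:
#   config_reloaded "$(oc -n openshift-monitoring logs --since=5m alertmanager-main-0)"
```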